Hi,

I'd like to add a new pass to LLVM which removes redundant profile counter

updates. The goal is to speed up code coverage testing and profile

generation

for PGO.

We may want to have a focused discussion about this goal, rather than a

particular suggestion. There are a lot of things we can do. Off the top of

my head, some are:

1. add some sort of alias annotation (such as an independent TBAA root

node) to all the counter increment memory instructions to tell the

optimizer they don't interfere with the usual loads and stores.

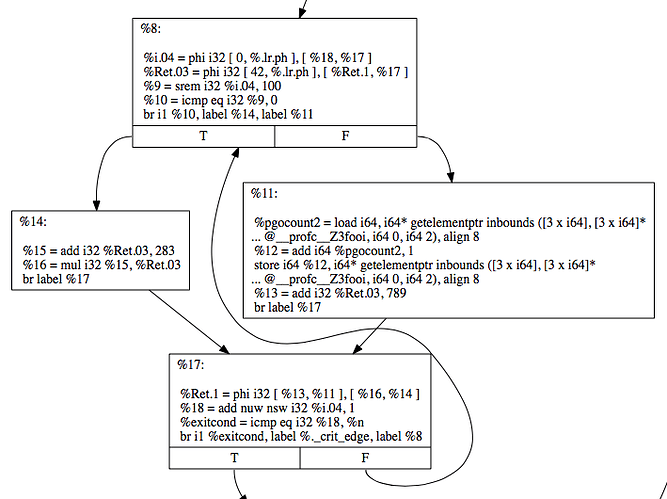

2. communicate to the optimizer that counters can be registerized. In a

loop like:

for (int i = 0; i < N; i++) {

if (foo())

bar();

else

baz();

}

we perform O(N) counter increments (i.e. load, increment, store) last I

checked. However, if the counters are in registers, then we only perform

O(1) memory operations. This can dramatically reduce the pressure on the

CPU's load/store units and also relieve cross-core cache line ping-pong

when two cores are executing the same code. Note that the latter benefit is

attained even if we ultimately end up spilling the counters due to

increased register pressure.

I actually don't know what is preventing the usual optimization pipeline

from getting 2 right.

I'm sending this email out to describe my approach, share some early

results,

and gather feedback.

Problem Overview

A profile counter is redundant if it's incremented in exactly the same

basic

blocks as some other profile counter. Consider the following module:

local void f1() {

instrprof_increment(profc_f1);

}

void f2() {

instrprof_increment(profc_f2);

f1();

}

Once the inliner runs and deletes f1, we're left with:

void f2() {

instrprof_increment(profc_f2);

instrprof_increment(profc_f1);

}

Now we can say profc_f1 is redundant (or, an alias for profc_f2).

I've noticed that frontend-based instrumentation can generate many

redundant

profile counters. This degrades performance and increases code size. We

can

address the problem by getting rid of redundant counter updates. The trick

is

to make sure we get back the same profiles.

Proposed Solution

I propose a pruning pass which takes the following steps:

1. Delete functions with local linkage and only one use, if that use is

in

a profile data record.

These functions are left around by the inliner (it doesn't know that

they're safe to delete). Deleting them reduces code size and

simplifies

subsequent analysis of profile counters.

2. Determine which profile counters are essential.

3. Erase all updates to redundant profile counters.

4. Emit the aliases into a new section in the binary.

Aliases are represented as { Dst: i64*, Src: i64* } tuples. Some

changes

in compiler-rt are required to walk the alias section and fill in the

correct execution counts at program exit time.

This pass needs to be run after the inliner in order to be effective.

The complexity of this pass is O(N*M), where N is the number of profile

counters, and M is the average number of updates per counter. In practice

it is

a bit faster, since we can skip the analysis of counters which are

discovered to

be redundant early on in the process.

I think a conceptually simpler design is something like:

for each CFG edge:

record which FE counters have ended up associated with it

remove FE counters

run IR instrumentation pass

emit a side table mapping IR instr counters to FE counters (more generally:

how to reconstruct FE counters from the IR counters)

The problem is simply reduced to the IR instrumentation pass.

Early Results

The pruning pass results in 25% speed improvement in the example program

above

(where f2 is called in a loop 10^8 times).

Here is a slightly less contrived example:

#include <vector>

#include <algorithm>

#include <cstdlib>

static void escape(void *p) {

asm volatile("" : : "g"(p) : "memory");

}

int main(int argc, char **argv) {

std::vector<int> V(atoi(argv[1]));

escape(reinterpret_cast<void *>(V.data()));

std::sort(V.begin(), V.end());

return V[0];

}

I get the following results on my desktop (10^8 elements, 5 runs each):

O3: 0.262s

O3 + PGOInstr: 0.663s

O3 + PGOInstr + Pruning: 0.606s (8.6% performance win, 672 aliases)

O3 + CoverageInstr: 0.690s

O3 + CoverageInstr + Pruning: 0.610s (11.6% performance win, 688 aliases)

Next Steps?

Is the performance of instrumented code something we think we need to fix?

With frontend instrumentation it definitely is.

What's an acceptable compile-time overhead for running this pruning pass?

Instrumented builds are "special" anyway so a fair slowdown is probably

acceptable. I.e. this doesn't affect regular developer compilation times.

As a baseline, maybe compare compile time of `-fprofile-instr-generate -O2`

vs. `-O2`. If `-fprofile-instr-generate -O2` is 10% slower than the

control, then that indicates that a 5% extra slowdown is probably

reasonable for a substantial reduction in profiling overhead (which can

result in a qualitative improvement to the actual usability of the

instrumented program).

-- Sean Silva