First of all, sorry for the long mail.

Inspired by the excellent analysis Rui did for lld, I decided to do

the same for llvm.

I'm personally very interested in build-time for LTO configuration,

with particular attention to the time spent in the optimizer.

Rafael did something similar back in March, so this can be considered

as an update. This tries to include a more accurate high-level

analysis of where llvm is spending CPU cycles.

Here I present 2 cases: clang building itself with `-flto` (Full), and

clang building an internal codebase which I'm going to refer as

`game7`.

It's a mid-sized program (it's actually a game), more or less of the

size of clang, which we use internally as benchmark to track

compile-time/runtime improvements/regression.

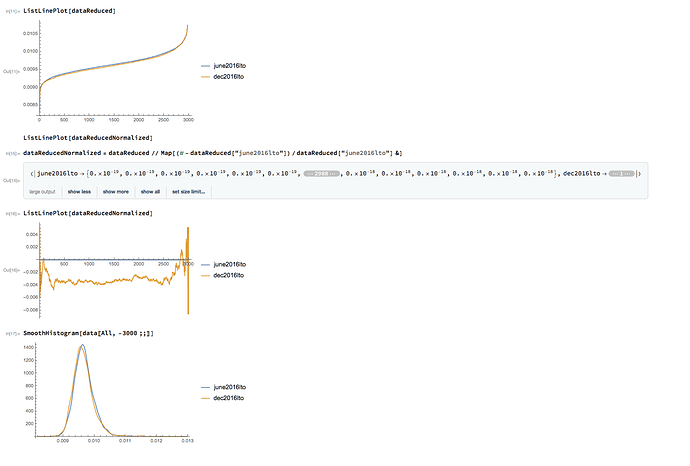

I picked two random revisions of llvm: trunk (December 16th 2016) and

trunk (June 2nd 2016), so, roughly, 6 months period.

My setup is a Mac Pro running Linux (NixOS).

These are the numbers I collected (including the output of -mllvm -time-passes).

For clang:

June 2nd:

real 22m9.278s

user 21m30.410s

sys 0m38.834s

Total Execution Time: 1270.4795 seconds (1269.1330 wall clock)

289.8102 ( 23.5%) 18.8891 ( 53.7%) 308.6993 ( 24.3%) 308.6906 (

24.3%) X86 DAG->DAG Instruction Selection

97.2730 ( 7.9%) 0.7656 ( 2.2%) 98.0386 ( 7.7%) 98.0010 (

7.7%) Global Value Numbering

62.4091 ( 5.1%) 0.4779 ( 1.4%) 62.8870 ( 4.9%) 62.8665 (

5.0%) Function Integration/Inlining

58.6923 ( 4.8%) 0.4767 ( 1.4%) 59.1690 ( 4.7%) 59.1323 (

4.7%) Combine redundant instructions

53.9602 ( 4.4%) 0.6163 ( 1.8%) 54.5765 ( 4.3%) 54.5409 (

4.3%) Combine redundant instructions

51.0470 ( 4.1%) 0.5703 ( 1.6%) 51.6173 ( 4.1%) 51.5425 (

4.1%) Loop Strength Reduction

47.4067 ( 3.8%) 1.3040 ( 3.7%) 48.7106 ( 3.8%) 48.7034 (

3.8%) Greedy Register Allocator

36.7463 ( 3.0%) 0.8133 ( 2.3%) 37.5597 ( 3.0%) 37.4612 (

3.0%) Induction Variable Simplification

37.0125 ( 3.0%) 0.2699 ( 0.8%) 37.2824 ( 2.9%) 37.2478 (

2.9%) Combine redundant instructions

34.2071 ( 2.8%) 0.2737 ( 0.8%) 34.4808 ( 2.7%) 34.4487 (

2.7%) Combine redundant instructions

25.6627 ( 2.1%) 0.3215 ( 0.9%) 25.9842 ( 2.0%) 25.9509 (

2.0%) Combine redundant instructions

Dec 16th:

real 27m34.922s

user 26m53.489s

sys 0m41.533s

287.5683 ( 18.5%) 19.7048 ( 52.3%) 307.2731 ( 19.3%) 307.2648 (

19.3%) X86 DAG->DAG Instruction Selection

197.9211 ( 12.7%) 0.5104 ( 1.4%) 198.4314 ( 12.5%) 198.4091 (

12.5%) Function Integration/Inlining

106.9669 ( 6.9%) 0.8316 ( 2.2%) 107.7984 ( 6.8%) 107.7633 (

6.8%) Global Value Numbering

89.7571 ( 5.8%) 0.4840 ( 1.3%) 90.2411 ( 5.7%) 90.2067 (

5.7%) Combine redundant instructions

79.0456 ( 5.1%) 0.7534 ( 2.0%) 79.7990 ( 5.0%) 79.7630 (

5.0%) Combine redundant instructions

55.6393 ( 3.6%) 0.3116 ( 0.8%) 55.9509 ( 3.5%) 55.9187 (

3.5%) Combine redundant instructions

51.8663 ( 3.3%) 1.4090 ( 3.7%) 53.2754 ( 3.3%) 53.2684 (

3.3%) Greedy Register Allocator

52.5721 ( 3.4%) 0.3021 ( 0.8%) 52.8743 ( 3.3%) 52.8416 (

3.3%) Combine redundant instructions

49.0593 ( 3.2%) 0.6101 ( 1.6%) 49.6694 ( 3.1%) 49.5904 (

3.1%) Loop Strength Reduction

41.2602 ( 2.7%) 0.9608 ( 2.5%) 42.2209 ( 2.7%) 42.1122 (

2.6%) Induction Variable Simplification

38.1438 ( 2.5%) 0.3486 ( 0.9%) 38.4923 ( 2.4%) 38.4603 (

2.4%) Combine redundant instructions

so, llvm is around 20% slower than it used to be.

For our internal codebase the situation seems slightly worse:

`game7`

June 2nd:

Total Execution Time: 464.3920 seconds (463.8986 wall clock)

88.0204 ( 20.3%) 6.0310 ( 20.0%) 94.0514 ( 20.3%) 94.0473 (

20.3%) X86 DAG->DAG Instruction Selection

27.4382 ( 6.3%) 16.2437 ( 53.9%) 43.6819 ( 9.4%) 43.6823 (

9.4%) X86 Assembly / Object Emitter

34.9581 ( 8.1%) 0.5274 ( 1.8%) 35.4855 ( 7.6%) 35.4679 (

7.6%) Function Integration/Inlining

27.8556 ( 6.4%) 0.3419 ( 1.1%) 28.1975 ( 6.1%) 28.1824 (

6.1%) Global Value Numbering

22.1479 ( 5.1%) 0.2258 ( 0.7%) 22.3737 ( 4.8%) 22.3593 (

4.8%) Combine redundant instructions

19.2346 ( 4.4%) 0.3639 ( 1.2%) 19.5985 ( 4.2%) 19.5870 (

4.2%) Post RA top-down list latency scheduler

15.8085 ( 3.6%) 0.2675 ( 0.9%) 16.0760 ( 3.5%) 16.0614 (

3.5%) Combine redundant instructions

Dec 16th:

Total Execution Time: 861.0898 seconds (860.5808 wall clock)

135.7207 ( 15.7%) 0.2484 ( 0.8%) 135.9692 ( 15.2%) 135.9531 (

15.2%) Combine redundant instructions

103.6609 ( 12.0%) 0.4566 ( 1.4%) 104.1175 ( 11.7%) 104.1014 (

11.7%) Combine redundant instructions

97.1083 ( 11.3%) 6.9183 ( 21.8%) 104.0266 ( 11.6%) 104.0181 (

11.6%) X86 DAG->DAG Instruction Selection

72.6125 ( 8.4%) 0.1701 ( 0.5%) 72.7826 ( 8.1%) 72.7678 (

8.1%) Combine redundant instructions

69.2144 ( 8.0%) 0.6060 ( 1.9%) 69.8204 ( 7.8%) 69.8007 (

7.8%) Function Integration/Inlining

60.7837 ( 7.1%) 0.3783 ( 1.2%) 61.1620 ( 6.8%) 61.1455 (

6.8%) Global Value Numbering

56.5650 ( 6.6%) 0.1980 ( 0.6%) 56.7630 ( 6.4%) 56.7476 (

6.4%) Combine redundant instructions

so, using LTO, lld takes 2x to build what it used to take (and all the

extra time seems spent in the optimizer).

As an (extra) experiment, I decided to take the unoptimized output of

game7 (via lld -save-temps) and pass to -opt -O2. That shows another

significant regression (with different characteristics).

June 2nd:

time opt -O2

real 6m23.016s

user 6m20.900s

sys 0m2.113s

35.9071 ( 10.0%) 0.7996 ( 10.9%) 36.7066 ( 10.0%) 36.6900 ( 10.1%)

Function Integration/Inlining

33.4045 ( 9.3%) 0.4053 ( 5.5%) 33.8098 ( 9.3%) 33.7919 ( 9.3%)

Global Value Numbering

27.1053 ( 7.6%) 0.5940 ( 8.1%) 27.6993 ( 7.6%) 27.6995 ( 7.6%)

Bitcode Writer

25.6492 ( 7.2%) 0.2491 ( 3.4%) 25.8984 ( 7.1%) 25.8805 ( 7.1%)

Combine redundant instructions

19.2686 ( 5.4%) 0.2956 ( 4.0%) 19.5642 ( 5.4%) 19.5471 ( 5.4%)

Combine redundant instructions

18.6697 ( 5.2%) 0.2625 ( 3.6%) 18.9323 ( 5.2%) 18.9148 ( 5.2%)

Combine redundant instructions

16.1294 ( 4.5%) 0.2320 ( 3.2%) 16.3614 ( 4.5%) 16.3434 ( 4.5%)

Combine redundant instructions

13.5476 ( 3.8%) 0.3945 ( 5.4%) 13.9421 ( 3.8%) 13.9295 ( 3.8%)

Combine redundant instructions

13.1746 ( 3.7%) 0.1767 ( 2.4%) 13.3512 ( 3.7%) 13.3405 ( 3.7%)

Combine redundant instructions

Dec 16th:

real 20m10.734s

user 20m8.523s

sys 0m2.197s

208.8113 ( 17.6%) 0.1703 ( 1.9%) 208.9815 ( 17.5%) 208.9698 (

17.5%) Value Propagation

179.6863 ( 15.2%) 0.1215 ( 1.3%) 179.8077 ( 15.1%) 179.7974 (

15.1%) Value Propagation

92.0158 ( 7.8%) 0.2674 ( 3.0%) 92.2832 ( 7.7%) 92.2613 (

7.7%) Combine redundant instructions

72.3330 ( 6.1%) 0.6026 ( 6.7%) 72.9356 ( 6.1%) 72.9210 (

6.1%) Combine redundant instructions

72.2505 ( 6.1%) 0.2167 ( 2.4%) 72.4672 ( 6.1%) 72.4539 (

6.1%) Combine redundant instructions

66.6765 ( 5.6%) 0.3482 ( 3.9%) 67.0247 ( 5.6%) 67.0040 (

5.6%) Combine redundant instructions

65.5029 ( 5.5%) 0.4092 ( 4.5%) 65.9121 ( 5.5%) 65.8913 (

5.5%) Combine redundant instructions

61.8355 ( 5.2%) 0.8150 ( 9.0%) 62.6505 ( 5.2%) 62.6315 (

5.2%) Function Integration/Inlining

54.9184 ( 4.6%) 0.3359 ( 3.7%) 55.2543 ( 4.6%) 55.2332 (

4.6%) Combine redundant instructions

50.2597 ( 4.2%) 0.2187 ( 2.4%) 50.4784 ( 4.2%) 50.4654 (

4.2%) Combine redundant instructions

47.2597 ( 4.0%) 0.3719 ( 4.1%) 47.6316 ( 4.0%) 47.6105 (

4.0%) Global Value Numbering

I don't have an infrastructure to measure the runtime performance

benefits/regression of clang, but I have for `game7`.

I wasn't able to notice any fundamental speedup (at least, not

something that justifies a 2x build-time).

tl;dr:

There are quite a few things to notice:

1) GVN used to be the top pass in the middle-end, in some cases, and

pretty much always in the top-3. This is not the case anymore, but

it's still a pass where we spend a lot of time. This is being worked

on by Daniel Berlin and me) ⚙ D26224 NewGVN so there's

some hope that will be sorted out (or at least there's a plan for it).

2) For clang, we spend 35% more time inside instcombine, and for game7

instcombine seems to largely dominate the amount of time we spend

optimizing IR. I tried to bisect (which is not easy considering the

test takes a long time to run), but I wasn't able to identify a single

point in time responsible for the regression. It seems to be an

additive effect. My wild (or not so wild) guess is that every day

somebody adds a matcher of two because that improves their testcase,

and at some point all this things add up. I'll try to do some

additional profiling but I guess large part of our time is spent

solving bitwise-domain dataflow problems (ComputeKnownBits et

similia). Once GVN will be in a more stable state, I plan to

experiment with caching results.

3) Something peculiar is that we spend 2x time in the inliner. I'm not

familiar with the inliner, IIRC there were some changes to threshold

recently, so any help here will be appreciated (both in reproducing

the results and with analysis).

4) For the last testcase (opt -O2 on large unoptimized chunk of

bitcode) llvm spends 33% of its time in CVP, and very likely in LVI. I

think it's not as lazy as it claims to be (or at least, the way we use

it). This doesn't show up in a full LTO run because we don't run CVP

as part of the default LTO pipeline, but the case shows how LVI can be

a bottleneck for large TUs (or at least how -O2 compile time degraded

on large TUs). I haven't thought about the problem very carefully, but

there seems to be some progress on this front (

https://llvm.org/bugs/show_bug.cgi?id=10584). I can't share the

original bitcode file but I can probably do some profiling on it as

well.

As next steps I'll try to get a more detailed analysis of the

problems. In particular, try to do what Rui did for lld but with more

coarse granularity (every week) to have a chart of the compile time

trend for these cases over the last 6 months, and post here.

I think (I know) some people are aware of the problems I outline in

this e-mail. But apparently not everybody. We're in a situation where

compile time is increasing without real control. I'm happy that Apple

is doing a serious effort to track build-time, so hopefully things

will improve. There are, although, some cases (like adding matchers in

instcombine or knobs) where the compile time regression is hard to

track until it's too late. LLVM as a project tries not to stop people

trying to get things done and that's great, but from time to time it's

good to take a step back and re-evaluate approaches.

The purpose of this e-mail was to outline where we regressed, for

those interested.

Thanks for your time, and of course, feedback welcome!