Background

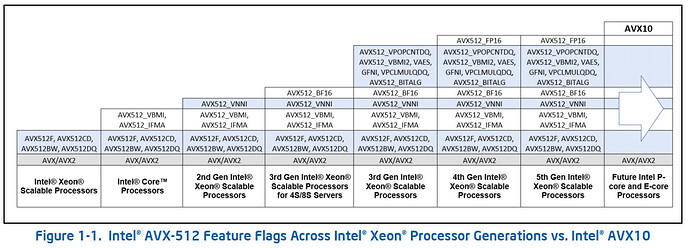

Intel just disclosed AVX10 [spec, technical paper]. The TL;DR: AVX10 is a vector ISA evolution that includes all the capabilities and features of the Intel AVX-512 ISA in a converged version that can run on both E-cores and P-cores.

Compared with traditional ISA enablement, enabling AVX10 in LLVM poses some unique challenges and design choices. It is also an unusual ISA: the initial version introduces no new instructions. In LLVM, the work looks more like a reorganization of the existing AVX512 instructions than the introduction of anything brand new.

Given the large amount of reorganization work, and that developers and users may prefer different proposals, we are requesting comments before we complete the final implementation. We also welcome collaboration from the community on the reorganization.

Major challenges

One of the design philosophies of AVX10 is to provide distinct 256-bit and 512-bit capabilities for E-cores and P-cores, respectively. However, the current organization of AVX512 instructions in LLVM assumes that 512-bit registers are always usable.

The other challenge is that AVX10 is a full set of all current AVX512 features (except for a few deprecated KNL features and AVX512_VP2INTERSECT), which means ~700 instructions, ~5000 intrinsics, and hundreds of places in the C++ code that check predicates might be affected.

New Options in Clang

1. -mavx10.x

The initial version is AVX10.1, which includes all instructions from the major AVX512 features. However, within AVX10.1 these features cannot be enabled or disabled individually.

Future versions will be numbered AVX10.2, AVX10.3, etc. All instructions in an earlier AVX10 version will be inherited by later versions.

2. -mavx10.x-256/-mavx10.x-512

If neither the -256 nor the -512 suffix is specified, the default vector size is 256 bits. The suffixes override the vector size with the specified value. Only 256 and 512 are supported in AVX10.

If more than one of these options is used on the command line, the last vector size will override the previous ones and the compiler will emit a warning.
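To make the option rules above concrete, here is a minimal C++ sketch of how a driver could resolve the AVX10 version and vector size from a list of command-line arguments. It is not Clang's implementation: the names AVX10Config, parseAVX10 and resolveAVX10 are hypothetical, and two behaviors are assumptions, namely that an option without a suffix counts as a 256-bit request and that the highest AVX10 version seen is kept because later versions inherit earlier ones.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical model of the AVX10 option rules; not Clang's driver code.
struct AVX10Config {
  int Minor = 0;         // N in -mavx10.N; 0 means AVX10 was not requested
  int VectorBits = 256;  // default vector size
  std::vector<std::string> Warnings;
};

// Parses "-mavx10.N", "-mavx10.N-256" or "-mavx10.N-512".
// Returns false for anything else, including unsupported widths.
static bool parseAVX10(const std::string &Flag, int &Minor, int &Bits) {
  const std::string Prefix = "-mavx10.";
  if (Flag.rfind(Prefix, 0) != 0)
    return false;
  const std::string Rest = Flag.substr(Prefix.size());
  const auto Dash = Rest.find('-');
  const std::string Ver = Rest.substr(0, Dash);
  if (Ver.empty() || Ver.find_first_not_of("0123456789") != std::string::npos)
    return false;
  Bits = 256;  // assumption: no suffix means the 256-bit default
  if (Dash != std::string::npos) {
    const std::string Suffix = Rest.substr(Dash + 1);
    if (Suffix == "512")
      Bits = 512;
    else if (Suffix != "256")
      return false;  // only 256 and 512 are supported
  }
  Minor = std::stoi(Ver);
  return true;
}

AVX10Config resolveAVX10(const std::vector<std::string> &Args) {
  AVX10Config Cfg;
  int Seen = 0;
  for (const std::string &A : Args) {
    int Minor = 0, Bits = 256;
    if (!parseAVX10(A, Minor, Bits))
      continue;
    ++Seen;
    // Assumption: later versions inherit earlier ones, so keep the highest.
    if (Minor > Cfg.Minor)
      Cfg.Minor = Minor;
    // The last vector size overrides the previous ones.
    Cfg.VectorBits = Bits;
  }
  if (Seen > 1)
    Cfg.Warnings.push_back("multiple -mavx10 options; last vector size used");
  assert(Cfg.VectorBits == 256 || Cfg.VectorBits == 512);
  return Cfg;
}
```

With these rules, -mavx10.1-256 -mavx10.1-512 resolves to 512 bits with one warning, and -mavx10.1-512 -mavx10.1 falls back to 256 bits.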

Ambiguity when mixing AVX10 and AVX512 options

Because AVX10 and AVX512 control 512-bit instructions differently, there are two major ambiguities when AVX10 and AVX512 options are mixed.

1. -mavx10.1-256/512 + -mno-avx512xxx

This combination can be interpreted either as invalid or as disabling the instructions that overlap between AVX10 and AVX512XXX.

2. -mavx10.1-256 + -mavx512xxx

No real target supports only 256-bit AVX10 plus some AVX512 features. Since AVX10 is a converged ISA, this combination can be interpreted as keeping all instructions at 256 bits, promoting them all to 512 bits, or promoting only the overlapping instructions to 512 bits.

The internal consensus of our GCC and LLVM support teams is that users are discouraged from mixing AVX10 and AVX512 options on the command line, and the compiler will emit a warning for these ambiguous cases; the generated code may differ between implementations.

In GCC’s RFC, they chose to ignore -mno-avx512xxx in case 1 and to generate 512-bit instructions for -mavx512xxx in case 2.

In LLVM, the behavior depends on which design choice we select.

Design choices

1. Make AVX10 imply all related AVX512 features and exclude all ZMM and 64-bit mask instructions if the avx10-512bit feature is not set

Since a single global avx10-512bit flag controls all 512-bit instructions, the legacy AVX512 features are affected by the flag as well. In short, we cannot control the 512-bit instructions of legacy AVX512 features separately when they are used together with AVX10.

With this choice, AVX10 options dominate AVX512 options in the driver: when used together with AVX10 options, AVX512 options are always ignored with a warning.
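A minimal C++ sketch of design 1, using the option spellings from this post: the driver drops every AVX512 option when any AVX10 option is present, and on the backend side one flag decides whether ZMM and 64-bit mask instructions are allowed. The function names are hypothetical and this is not the code in D157485; treating a legacy-only AVX512 target as 512-bit capable is also an assumption.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical sketch of design choice 1; not the code in D157485.
struct DriverResult {
  std::vector<std::string> Kept;      // options passed on to the frontend
  std::vector<std::string> Warnings;  // one per ignored AVX512 option
};

static bool startsWith(const std::string &S, const std::string &P) {
  return S.rfind(P, 0) == 0;
}

// Driver side: if any -mavx10.* option is present, every -mavx512* and
// -mno-avx512* option is dropped with a warning.
DriverResult applyDesign1(const std::vector<std::string> &Args) {
  bool HasAVX10 = false;
  for (const std::string &A : Args)
    HasAVX10 = HasAVX10 || startsWith(A, "-mavx10.");
  DriverResult R;
  for (const std::string &A : Args) {
    const bool IsAVX512 =
        startsWith(A, "-mavx512") || startsWith(A, "-mno-avx512");
    if (HasAVX10 && IsAVX512) {
      R.Warnings.push_back("ignoring '" + A + "' because AVX10 is enabled");
      continue;
    }
    R.Kept.push_back(A);
  }
  assert(R.Kept.size() + R.Warnings.size() == Args.size());
  return R;
}

// Backend side: with AVX10, the single avx10-512bit flag gates every ZMM
// and 64-bit mask instruction, whichever AVX512 feature it belongs to.
bool zmmAllowed(bool HasAVX10, bool HasAVX10_512bit, bool HasAVX512F) {
  if (HasAVX10)
    return HasAVX10_512bit;
  return HasAVX512F;  // assumption: legacy-only AVX512 keeps 512-bit enabled
}
```

For example, -mavx10.1-256 -mavx512bw keeps only -mavx10.1-256 and warns once, which is exactly where this design diverges from GCC.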

Pros: Easy to implement and very small code change

Cons: Cannot be consistent with GCC’s behavior

2. Split all existing AVX512 features into two parts, Scalar+Vec128+Vec256 and Vec512-only, and make AVX10.1-256 imply the former

With this choice, AVX10 and AVX512 become independent, so AVX10 and AVX512 options can be combined to turn the related instructions on or off. For example, -mavx10.1-256 -mavx512bw will turn on all 256-bit instructions of AVX10 plus the 512-bit instructions of AVX512BW, while -mavx10.1-512 -mno-avx512bw will turn off all instructions in AVX512BW and in features that imply AVX512BW. We can also match GCC’s behavior through modifications in the driver.
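The following C++ model illustrates design 2 under simplifying assumptions: each AVX512 feature is split into hypothetical "F-256" and "F-512" halves (not real LLVM feature strings), options are applied left to right, only three features are modeled, and implication chains between AVX512 features are left out. It is a sketch of the idea, not a proposed implementation.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical model of design choice 2. Each AVX512 feature F is split into
// "F-256" (scalar + 128-bit + 256-bit) and "F-512" (512-bit only).
struct FeatureSet {
  std::map<std::string, bool> On;
  bool has(const std::string &F) const {
    auto It = On.find(F);
    return It != On.end() && It->second;
  }
};

// Illustrative subset of the AVX512 features covered by AVX10.1.
const std::vector<std::string> kAVX512 = {"avx512f", "avx512bw", "avx512dq"};

// Applies one command-line option; options are processed left to right.
void applyOption(FeatureSet &S, const std::string &Flag) {
  const bool Is256 = Flag == "-mavx10.1-256";
  const bool Is512 = Flag == "-mavx10.1-512";
  for (const std::string &F : kAVX512) {
    if (Is256 || Is512) {
      S.On[F + "-256"] = true;    // AVX10.1-256 implies the lower half
      if (Is512)
        S.On[F + "-512"] = true;  // AVX10.1-512 implies both halves
    } else if (Flag == "-m" + F) {
      S.On[F + "-256"] = S.On[F + "-512"] = true;   // e.g. -mavx512bw
    } else if (Flag == "-mno-" + F) {
      S.On[F + "-256"] = S.On[F + "-512"] = false;  // e.g. -mno-avx512bw
    }
  }
}

FeatureSet resolve(const std::vector<std::string> &Args) {
  FeatureSet S;
  for (const std::string &A : Args)
    applyOption(S, A);
  // In this model a 512-bit half is never enabled without its lower half.
  for (const std::string &F : kAVX512)
    assert(!S.has(F + "-512") || S.has(F + "-256"));
  return S;
}
```

It reproduces the two examples in the text: -mavx10.1-256 -mavx512bw enables 512-bit AVX512BW while AVX512DQ stays at 256 bits, and -mavx10.1-512 -mno-avx512bw removes both halves of AVX512BW (turning off features that imply AVX512BW is not modeled).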

Pros: Can emulate GCC’s behavior when AVX10 is used with AVX512 options

Cons: A lot of effort to refactor all code involving AVX512 predicates

3. Add “|| hasAVX10()” logic to all current AVX512 predicates except the 512-bit parts, and still make AVX10-512 imply all related AVX512 features

With this choice, we will behave the same as GCC: -mno-avx512xxx will be ignored, and 512-bit instructions will be generated for -mavx512xxx when used together with, e.g., -mavx10.1-256.

This choice also requires a lot of refactoring effort. In addition, there is an obstacle: we cannot make intrinsics check for both AVX10 and AVX512 features, and according to front-end folks it is not easy to modify the front end to do so. The workaround is to change all intrinsic definitions to macros, which also requires a lot of refactoring.
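A minimal C++ sketch of the predicate split in design 3 for one feature, AVX512BW. The Subtarget struct and the makeSubtarget helper are hypothetical, loosely styled after LLVM's subtarget predicates; the BWIFlag parameter stands for the net effect of -mavx512bw/-mno-avx512bw on the command line. The sketch does not address the intrinsic-checking obstacle.

```cpp
#include <cassert>

// Hypothetical model of design choice 3 for a single feature (AVX512BW).
struct Subtarget {
  bool HasBWI = false;    // legacy AVX512BW feature bit
  bool HasAVX10 = false;  // any -mavx10.1[-256|-512]

  // Gates 512-bit AVX512BW instructions: unchanged legacy predicate.
  bool hasBWI() const { return HasBWI; }
  // Gates scalar/128/256-bit AVX512BW instructions: "|| hasAVX10()" added.
  bool hasBWIOrAVX10() const { return HasBWI || HasAVX10; }
};

// AVX10Bits: 0 (no AVX10), 256 or 512.
// BWIFlag: net effect of -mavx512bw / -mno-avx512bw.
Subtarget makeSubtarget(int AVX10Bits, bool BWIFlag) {
  assert(AVX10Bits == 0 || AVX10Bits == 256 || AVX10Bits == 512);
  Subtarget ST;
  ST.HasAVX10 = AVX10Bits != 0;
  // AVX10.1-512 still implies the legacy AVX512 features; in this sketch
  // the implication wins over -mno-avx512bw (assumption).
  ST.HasBWI = BWIFlag || AVX10Bits == 512;
  return ST;
}
```

Under this model, -mavx10.1-256 -mno-avx512bw keeps the 256-bit AVX512BW instructions (so the -mno option is effectively ignored), and -mavx10.1-256 -mavx512bw enables the 512-bit ones, matching the GCC behavior for case 1 and case 2.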

Preference

I prefer design 1, because it requires less effort and less disruptive changes to the code, while inconsistency with GCC in the ambiguous scenarios is not a problem. Here is the RFC patch: D157485

I may have missed some potential problems in the evaluation above. Please raise concerns or give your suggestions. Thanks in advance!

cc @RKSimon, @topperc, @e-kud, @nwg, @efriedma-quic